Callbacks: Observe, Customize, and Control Agent Behavior

Introduction: What Are Callbacks and Why Use Them?

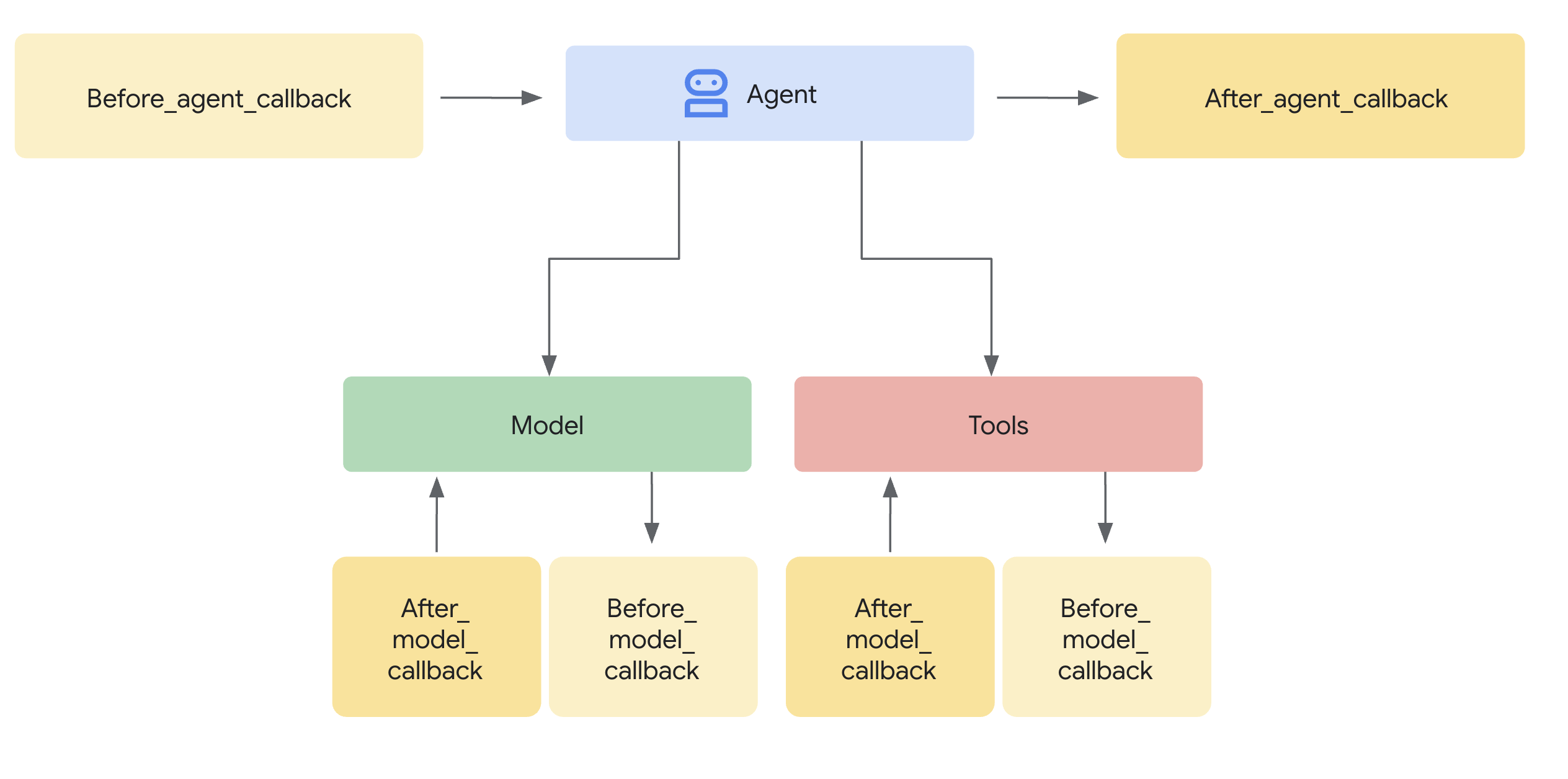

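Callbacks are a core feature of the ADK framework. They provide a powerful mechanism for hooking into an agent's execution flow: at specific, predefined points you can observe, customize, and even control the agent's behavior without modifying the ADK core framework code.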

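What Are They?

At their core, callbacks are standard Python functions that you define. You associate them with an agent when you create it, and the ADK framework then calls them automatically at key stages of the agent's lifecycle, such as:

- Before or after the agent's main logic runs
- Before sending a request to the large language model (LLM), or after receiving its response
- Before executing a tool (such as a Python function or another agent), or after it finishes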
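The six hook points listed above can be sketched as one no-op function per hook. This is a minimal illustration, not ADK code: the function names are hypothetical, `Any` stands in for the ADK types (`CallbackContext`, `LlmRequest`, `LlmResponse`, the tool object, `ToolContext`), and the dictionary keys are assumed to match the callback parameter names accepted by the `LlmAgent` constructor.

```python
from typing import Any, Optional

# Hypothetical no-op callbacks, one per hook point. Returning None from
# each of them leaves the agent's default behavior untouched.

def before_agent(callback_context: Any) -> Optional[Any]:
    return None  # the agent's main logic runs as usual

def after_agent(callback_context: Any) -> Optional[Any]:
    return None  # the agent's own output is kept

def before_model(callback_context: Any, llm_request: Any) -> Optional[Any]:
    return None  # the request is sent to the LLM

def after_model(callback_context: Any, llm_response: Any) -> Optional[Any]:
    return None  # the LLM's response is kept

def before_tool(tool: Any, args: dict, tool_context: Any) -> Optional[dict]:
    return None  # the tool is executed

def after_tool(
    tool: Any, args: dict, tool_context: Any, tool_response: dict
) -> Optional[dict]:
    return None  # the tool's result is kept

# Assumed constructor parameter names, mapped to the functions above.
callbacks = {
    "before_agent_callback": before_agent,
    "after_agent_callback": after_agent,
    "before_model_callback": before_model,
    "after_model_callback": after_model,
    "before_tool_callback": before_tool,
    "after_tool_callback": after_tool,
}
```

With ADK installed, a mapping like this could be unpacked into the agent constructor alongside the usual name, model, and instruction arguments.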

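Why Use Them?

Callbacks add significant flexibility and enable advanced agent capabilities:

- Observe and debug: log detailed information at critical steps for monitoring and troubleshooting
- Customize and control: modify data flowing through the agent (such as LLM requests or tool results), or skip certain steps entirely based on your business logic
- Implement guardrails: enforce safety rules, validate inputs and outputs, and block disallowed operations
- Manage state: read or dynamically update the agent's session state during execution
- Integrate and enhance: trigger external actions (API calls, notifications) or add features such as caching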
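As one illustration of the guardrail use case, the sketch below blocks a tool call based on its arguments. It assumes the before-tool callback receives the tool object, its argument dict, and a tool context, and that returning a dict causes the framework to skip the tool and use that dict as the result. The tool name `get_weather`, the `city` argument, and the block list are all hypothetical, and a `SimpleNamespace` stands in for the real tool object so the example runs without ADK.

```python
from types import SimpleNamespace
from typing import Any, Optional

BLOCKED_CITIES = {"atlantis"}  # hypothetical policy for this sketch

def block_restricted_city(
    tool: Any, args: dict, tool_context: Any
) -> Optional[dict]:
    """Return an error dict for disallowed lookups, otherwise None."""
    city = str(args.get("city", "")).lower()
    if tool.name == "get_weather" and city in BLOCKED_CITIES:
        # A non-None return is meant to replace the tool's result.
        return {
            "status": "error",
            "error_message": f"Lookups for '{city}' are not allowed.",
        }
    return None  # let the tool run normally

# Stand-in for a real tool object; only the .name attribute is used.
fake_tool = SimpleNamespace(name="get_weather")
print(block_restricted_city(fake_tool, {"city": "Paris"}, None))
print(block_restricted_city(fake_tool, {"city": "Atlantis"}, None))
```

The first call returns None and the tool would run; the second returns the error dict in place of a tool result.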

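How Are They Added?

You register callbacks when you create an Agent or LlmAgent instance, by passing your Python functions as arguments to the agent's constructor (__init__).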

```python
from typing import Optional

from google.adk.agents import LlmAgent
from google.adk.agents.callback_context import CallbackContext
from google.adk.models import LlmResponse, LlmRequest

# --- Define your callback function ---
def my_before_model_logic(
    callback_context: CallbackContext, llm_request: LlmRequest
) -> Optional[LlmResponse]:
    print(f"Callback running before model call for agent: {callback_context.agent_name}")
    # ... your custom logic here ...
    return None  # Allow the model call to proceed

# --- Register it during Agent creation ---
my_agent = LlmAgent(
    name="MyCallbackAgent",
    model="gemini-2.0-flash",  # Or your desired model
    instruction="Be helpful.",
    # Other agent parameters...
    before_model_callback=my_before_model_logic,  # Pass the function here
)
```

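The Callback Mechanism: Interception and Control

When the ADK framework reaches a point where a callback can run (for example, just before calling the LLM), it checks whether a corresponding callback function has been registered for that agent. If one has, the framework executes it.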

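Context Is Key

Callback functions do not run in isolation. The framework passes a special context object (CallbackContext or ToolContext) as an argument. These objects carry key information about the agent's current execution state, including invocation details, session state, and possibly references to services such as artifacts or memory. You use these context objects to understand the current situation and to interact with the framework (see the dedicated "Context Objects" section for details).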
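A minimal sketch of using the context object to read and update session state follows. It assumes the context exposes an `agent_name` attribute and a dict-like `state`; the state key `run_count` and the function name are hypothetical, and a `SimpleNamespace` with a plain dict stands in for a real CallbackContext so the example runs without ADK.

```python
from types import SimpleNamespace
from typing import Any, Optional

def count_runs(callback_context: Any) -> Optional[Any]:
    """Before-agent style callback that counts invocations in session state."""
    state = callback_context.state
    # Read the current value (default 0) and write the updated one back.
    state["run_count"] = state.get("run_count", 0) + 1
    print(f"[{callback_context.agent_name}] run #{state['run_count']}")
    return None  # do not interfere with the agent's normal execution

# Stand-in context: only agent_name and a dict-like state are needed here.
fake_context = SimpleNamespace(agent_name="DemoAgent", state={})
count_runs(fake_context)
count_runs(fake_context)
```

Because the callback only touches `agent_name` and `state`, the same function could be registered on a real agent, where the framework would supply the context object.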

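Controlling the Flow (The Core Mechanism)

The most powerful aspect of callbacks is that their return value can influence what the agent does next. This is how you intercept and control the execution flow: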

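- return None (Allow Default Behavior):
  - This return value signals that the callback has finished its work (for example logging, inspection, or minor in-place modifications to mutable input arguments such as llm_request) and that the ADK agent should continue its normal execution flow.
  - For before_* callbacks (before_agent, before_model, before_tool), returning None means the next step proceeds (running the agent logic, calling the LLM, executing the tool).
  - For after_* callbacks (after_agent, after_model, after_tool), returning None means the result produced by the preceding step (the agent's output, the LLM's response, the tool's result) is used as is.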
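The effect of returning None can be illustrated with a small, framework-independent dispatcher. This is a sketch under stated assumptions and not ADK's actual implementation: `call_model_with_hooks` and its parameters are hypothetical, and plain strings stand in for request and response objects.

```python
from typing import Callable, Optional

Hook = Callable[[str], Optional[str]]

def call_model_with_hooks(
    request: str,
    model: Callable[[str], str],
    before: Optional[Hook] = None,
    after: Optional[Hook] = None,
) -> str:
    """Run a model call, consulting optional before/after hooks."""
    if before is not None:
        override = before(request)
        if override is not None:
            # A non-None value from the before hook replaces the model call.
            return override
    # The before hook returned None (or was absent): proceed with the step.
    response = model(request)
    if after is not None:
        replacement = after(response)
        if replacement is not None:
            # A non-None value from the after hook replaces the result.
            return replacement
    # The after hook returned None (or was absent): keep the original result.
    return response

def log_only(request: str) -> Optional[str]:
    print(f"about to call the model with: {request!r}")
    return None  # observe only, then allow the default behavior

result = call_model_with_hooks("hi", model=lambda r: r.upper(), before=log_only)
print(result)
```

Here `log_only` returns None, so the stand-in model runs and its output is returned unchanged.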

```python
from typing import Optional

from google.adk.agents import LlmAgent
from google.adk.agents.callback_context import CallbackContext
from google.adk.models import LlmResponse, LlmRequest
from google.adk.runners import Runner
from google.adk.sessions import InMemorySessionService
from google.genai import types

GEMINI_2_FLASH = "gemini-2.0-flash"

# --- Define the Callback Function ---
def simple_before_model_modifier(
    callback_context: CallbackContext, llm_request: LlmRequest
) -> Optional[LlmResponse]:
    """Inspects/modifies the LLM request or skips the call."""
    agent_name = callback_context.agent_name
    print(f"[Callback] Before model call for agent: {agent_name}")

    # Inspect the last user message in the request contents
    last_user_message = ""
    if llm_request.contents and llm_request.contents[-1].role == 'user':
        if llm_request.contents[-1].parts:
            # Part.text is None for non-text parts, so fall back to ""
            last_user_message = llm_request.contents[-1].parts[0].text or ""
    print(f"[Callback] Inspecting last user message: '{last_user_message}'")

    # --- Modification Example ---
    # Add a prefix to the system instruction
    original_instruction = llm_request.config.system_instruction or types.Content(role="system", parts=[])
    prefix = "[Modified by Callback] "
    # Ensure system_instruction is Content and parts list exists
    if not isinstance(original_instruction, types.Content):
        # Handle case where it might be a string (though config expects Content)
        original_instruction = types.Content(role="system", parts=[types.Part(text=str(original_instruction))])
    if not original_instruction.parts:
        # parts may be None or empty, so assign a new list instead of appending
        original_instruction.parts = [types.Part(text="")]

    # Modify the text of the first part
    modified_text = prefix + (original_instruction.parts[0].text or "")
    original_instruction.parts[0].text = modified_text
    llm_request.config.system_instruction = original_instruction
    print(f"[Callback] Modified system instruction to: '{modified_text}'")

    # --- Skip Example ---
    # Check if the last user message contains "BLOCK"
    if "BLOCK" in last_user_message.upper():
        print("[Callback] 'BLOCK' keyword found. Skipping LLM call.")
        # Return an LlmResponse to skip the actual LLM call
        return LlmResponse(
            content=types.Content(
                role="model",
                parts=[types.Part(text="LLM call was blocked by before_model_callback.")],
            )
        )
    else:
        print("[Callback] Proceeding with LLM call.")
        # Return None to allow the (modified) request to go to the LLM
        return None

# Create LlmAgent and Assign Callback
my_llm_agent = LlmAgent(
    name="ModelCallbackAgent",
    model=GEMINI_2_FLASH,
    instruction="You are a helpful assistant.",  # Base instruction
    description="An LLM agent demonstrating before_model_callback",
    before_model_callback=simple_before_model_modifier,  # Assign the function here
)

APP_NAME = "guardrail_app"
USER_ID = "user_1"
SESSION_ID = "session_001"

# Session and Runner
session_service = InMemorySessionService()
# Note: in ADK releases where create_session is a coroutine, await it instead.
session = session_service.create_session(app_name=APP_NAME, user_id=USER_ID, session_id=SESSION_ID)
runner = Runner(agent=my_llm_agent, app_name=APP_NAME, session_service=session_service)

# Agent Interaction
def call_agent(query):
    content = types.Content(role='user', parts=[types.Part(text=query)])
    events = runner.run(user_id=USER_ID, session_id=SESSION_ID, new_message=content)

    for event in events:
        # Guard against final events that carry no content or parts
        if event.is_final_response() and event.content and event.content.parts:
            final_response = event.content.parts[0].text
            print("Agent Response: ", final_response)

call_agent("callback example")
```